Mixture PK-YOLO

Brain tumor detection using a YOLO-based model

This project builds on the PK-YOLO paper.

Abstract

- MRI slices

- Axial, coronal, and sagittal imaging views

- PK-YOLO

- Detect brain tumors from 2D multiplanar MRI slices

- The backbone is pretrained only on axial views

- The backbone extracts the same axial-biased features for all planes (axial, coronal, and sagittal)

- PK-YOLO uses SparK RepViT as main backbone

- SparK pretraining strengthens feature extraction, especially axial

- Input: 640x640 MRI slices from the axial, coronal, and sagittal planes

- Auxiliary CBNet acts as an extra gradient branch that improves feature quality

- Final detection is performed by YOLOv9

- Achieves the highest performance among the DETR-based and YOLO-based detectors compared in the original study

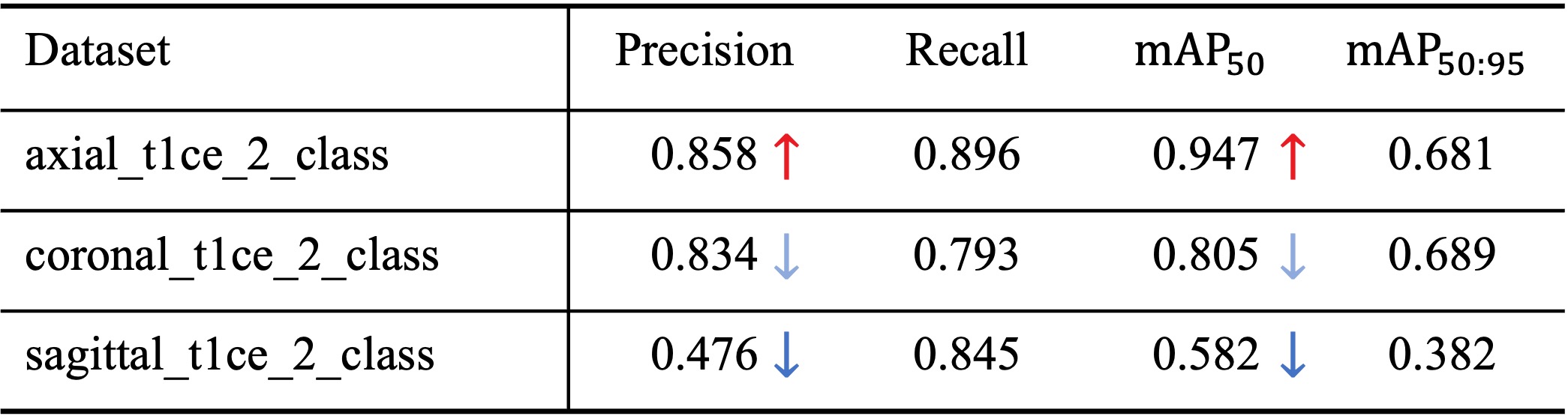

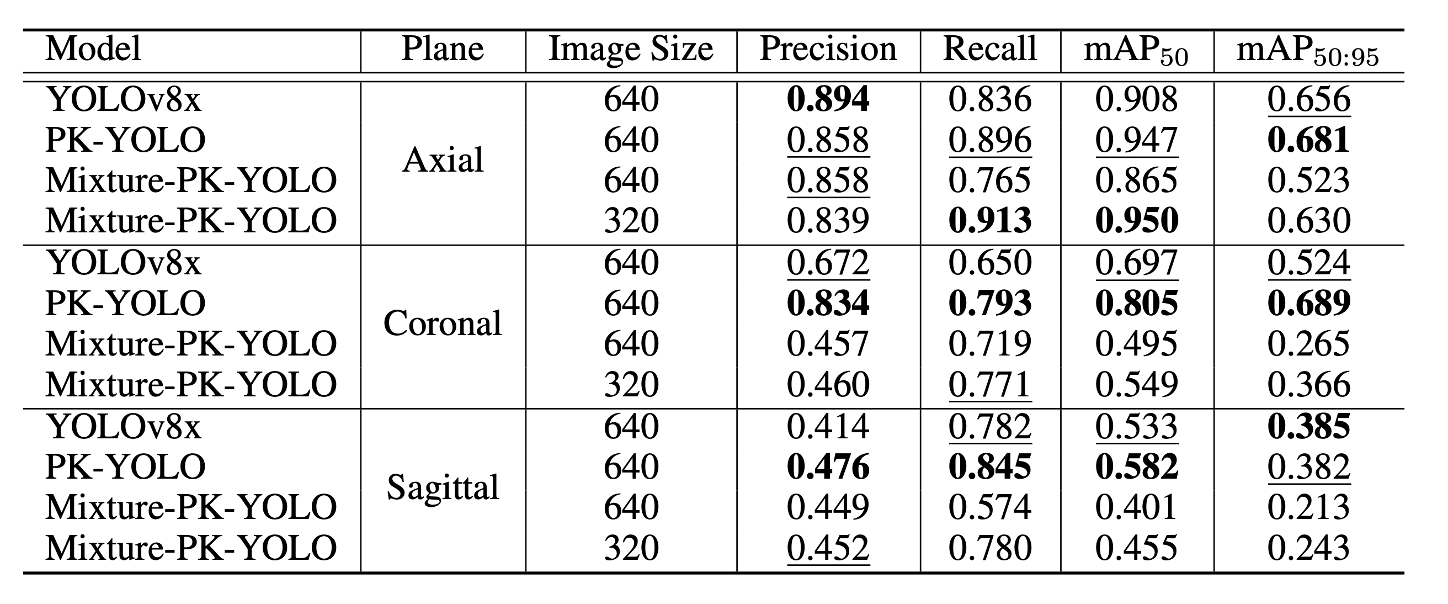

Limitations of the Previous Work

- Pretraining on single-plane data

- Axial-biased features

- Same features are applied to other planes

- Large performance gap

- Axial: \(mAP_{50} = 0.947\)

- Coronal: \(mAP_{50} = 0.805\)

- Sagittal: \(mAP_{50} = 0.582\)

Related Work

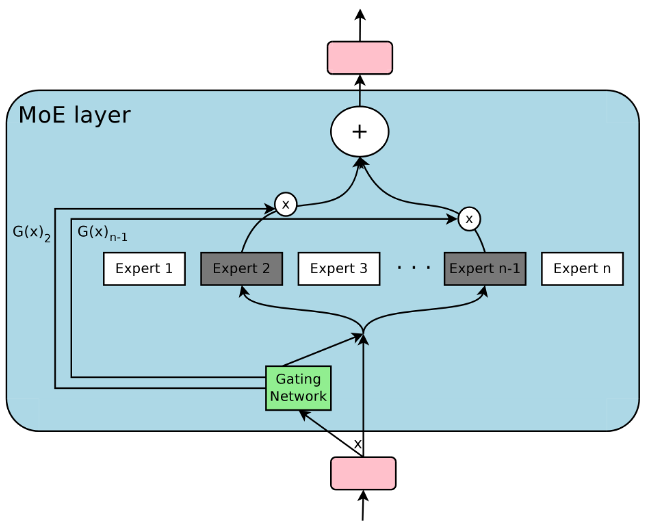

Mixture-of-Experts (MoE)

- Each model acts as an expert

- Gating layer to combine outputs into one

- Advantages

- Computational efficiency

- Scalability

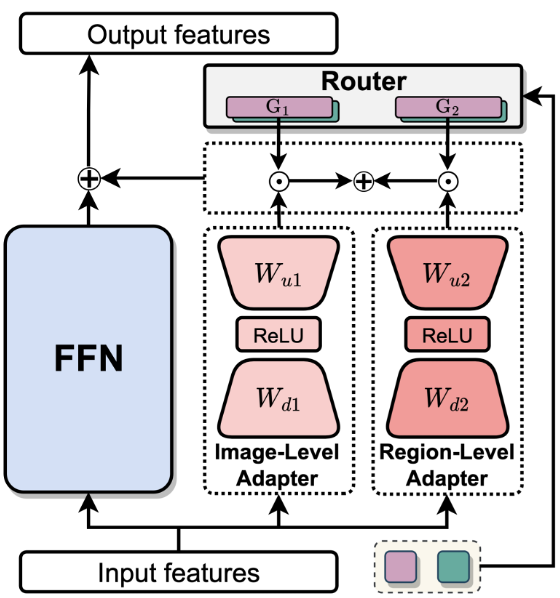
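The efficiency argument can be illustrated with a minimal sketch of sparse gating, assuming a top-k selection rule: only the selected experts are evaluated, and their outputs are combined with softmax weights. The function name `moe_forward`, the toy experts, and the hard-coded `gate_scores` are hypothetical; in a real MoE the scores would come from a learned gating layer applied to the input.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_scores, top_k=1):
    """Combine the outputs of the top_k highest-scoring experts."""
    # Rank experts by gate score; experts outside the top_k are never called
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize the gate scores over the chosen experts only
    weights = softmax([gate_scores[i] for i in chosen])
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))

# Toy experts (assumption): simple scalar functions stand in for networks
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: x * x]
gate_scores = [0.1, 2.0, 0.5]  # assumption: normally produced by a gating layer

y = moe_forward(3.0, experts, gate_scores, top_k=1)
```

With `top_k=1` only the second expert runs, which is where the computational savings come from. Setting `top_k` to the number of experts gives a dense weighted combination instead.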

LION

- Router adjusts the ratio between image-level and region-level knowledge

- Demonstrates how a router weighs two features.

For more information, refer to this paper review post.

Method

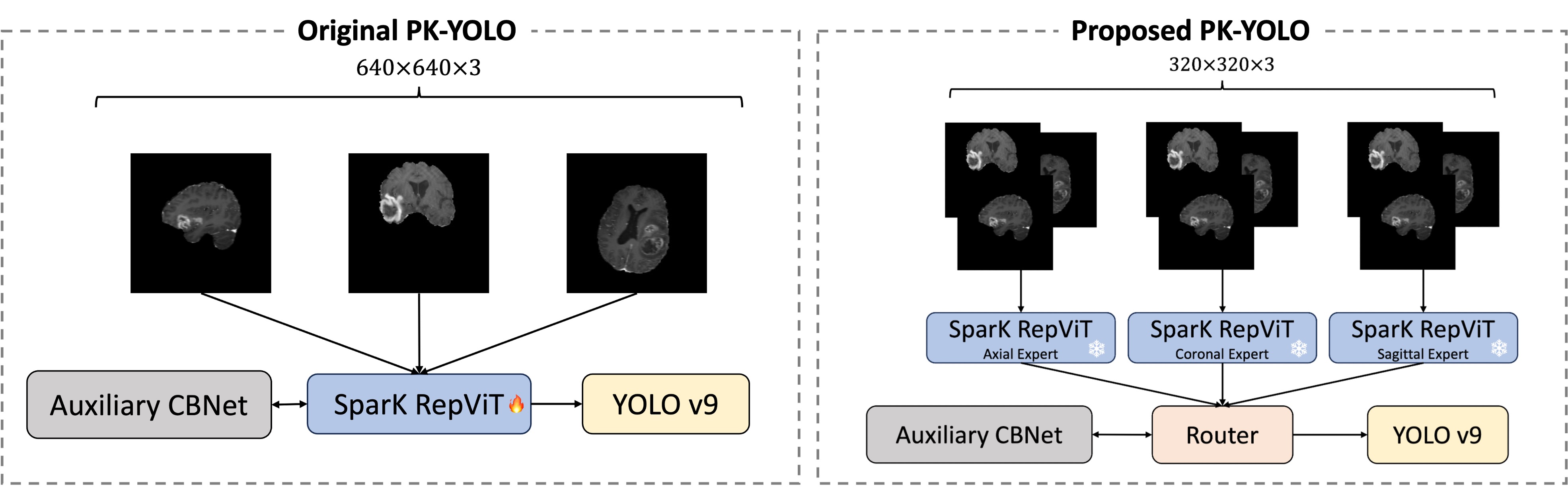

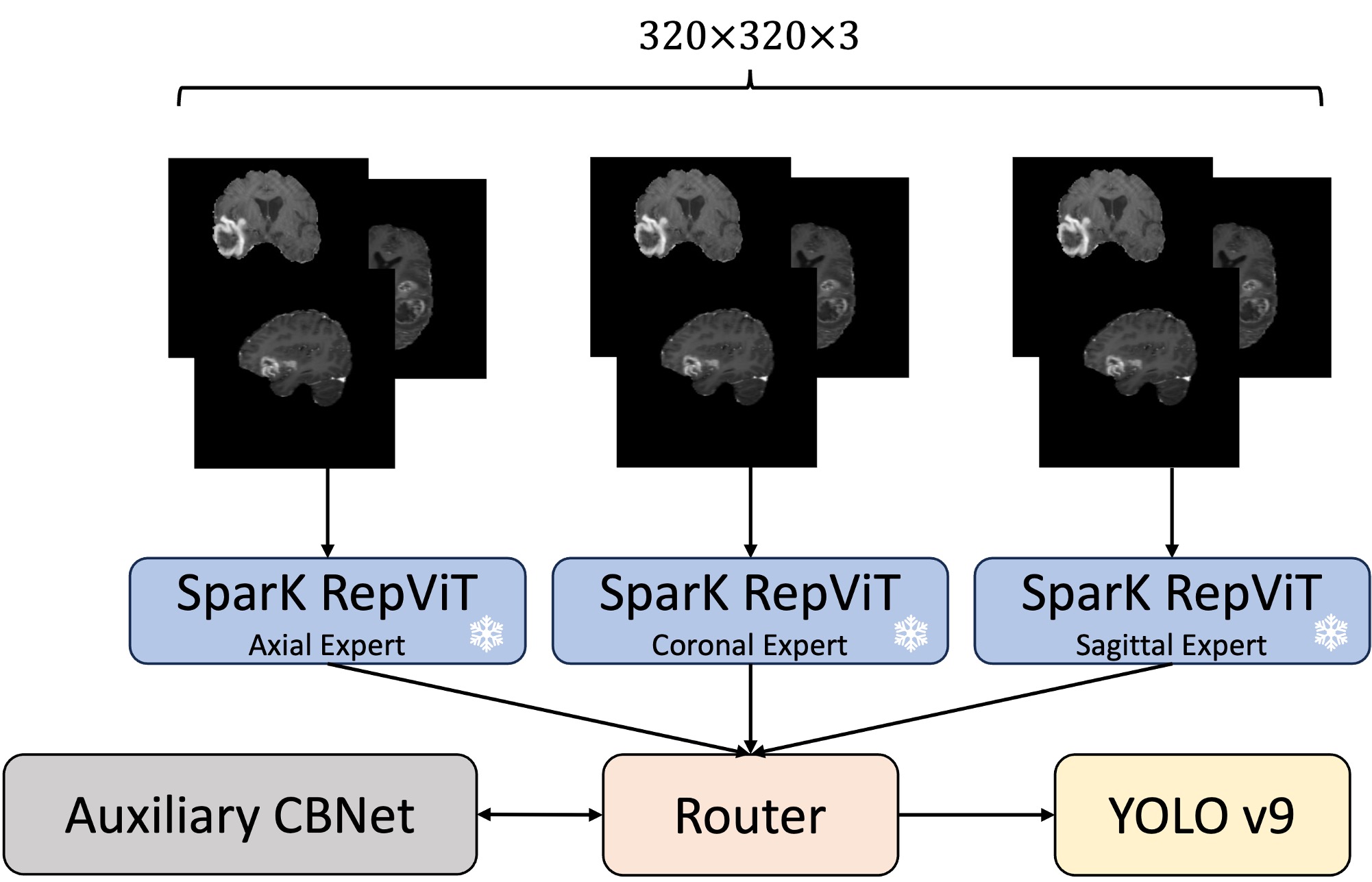

Building on the limitations and the insights from related work, the remedy for the single-backbone design seems straightforward. The figure here provides a direct one-to-one comparison between the original PK-YOLO model and our proposed Mixture PK-YOLO model. The main difference between the two architectures is that our model uses three separate backbones, one per plane, instead of relying on a single pretrained backbone. In addition, we introduce a router module right after the backbone outputs to combine the plane-specific features.

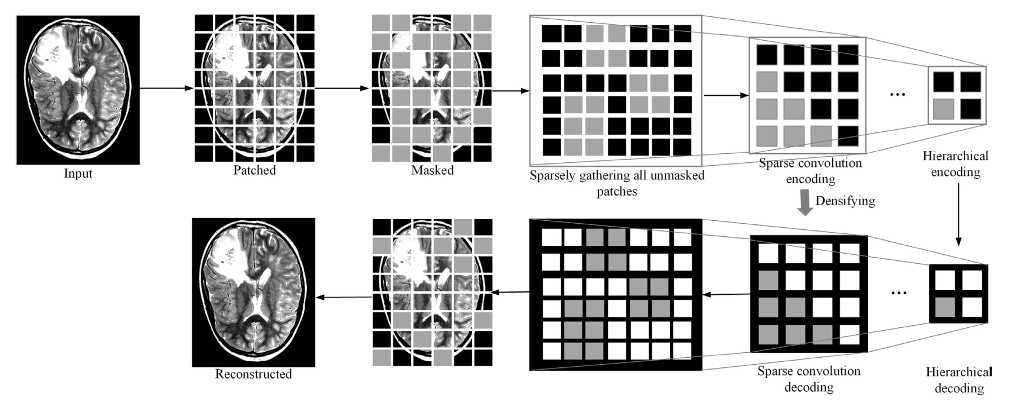

SparK Pretraining

Pretraining is done using SparK.

After the sparse convolutions, unmasked patches are fed into an encoder, followed by a densifying step. The final output is a reconstructed image where the model has learned to infer the masked patches.

Through this SparK pretraining process, the backbone acquires general knowledge of brain tumor images and is then used in the PK-YOLO architecture for tumor detection.
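As a rough illustration of the masking that precedes reconstruction, the sketch below zeroes out a random subset of patches in a 2D slice. It covers only the masking step, not the sparse encoder or the densifying decoder. The function name `random_patch_mask`, the patch size of 32, and the mask ratio of 0.6 are assumptions chosen for illustration, not values taken from this project.

```python
import numpy as np

def random_patch_mask(image, patch=32, mask_ratio=0.6, seed=0):
    """Zero out a random subset of non-overlapping patches.

    Returns the masked image and a boolean patch grid (True = patch kept).
    """
    h, w = image.shape
    gh, gw = h // patch, w // patch
    rng = np.random.default_rng(seed)
    n_masked = int(round(gh * gw * mask_ratio))
    keep = np.ones(gh * gw, dtype=bool)
    # Pick the patches to hide, without replacement
    keep[rng.choice(gh * gw, size=n_masked, replace=False)] = False
    keep = keep.reshape(gh, gw)
    # Expand the patch grid to pixel resolution
    pixel_mask = np.repeat(np.repeat(keep, patch, axis=0), patch, axis=1)
    return image * pixel_mask, keep

# Stand-in for a 640x640 MRI slice (assumption: single channel, all ones)
slice_2d = np.ones((640, 640), dtype=np.float32)
masked, keep = random_patch_mask(slice_2d)
```

During pretraining, the model would see only the kept patches and be trained to reconstruct the hidden ones.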

Model Architecture

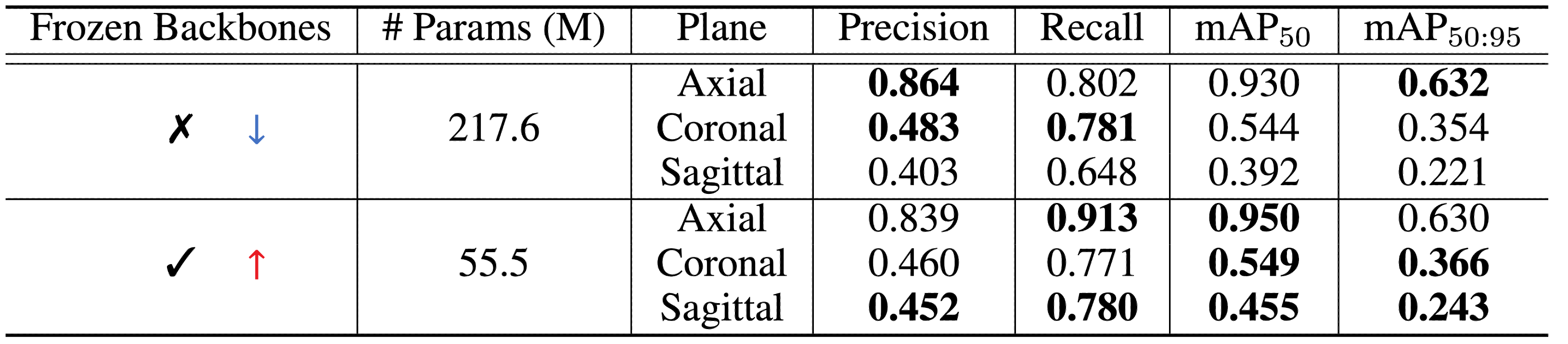

- Employs three pretrained backbone models

- Treats each pretrained backbone as an expert in each plane

- Freezes all three backbones

- Router module fuses the three outputs from the backbones

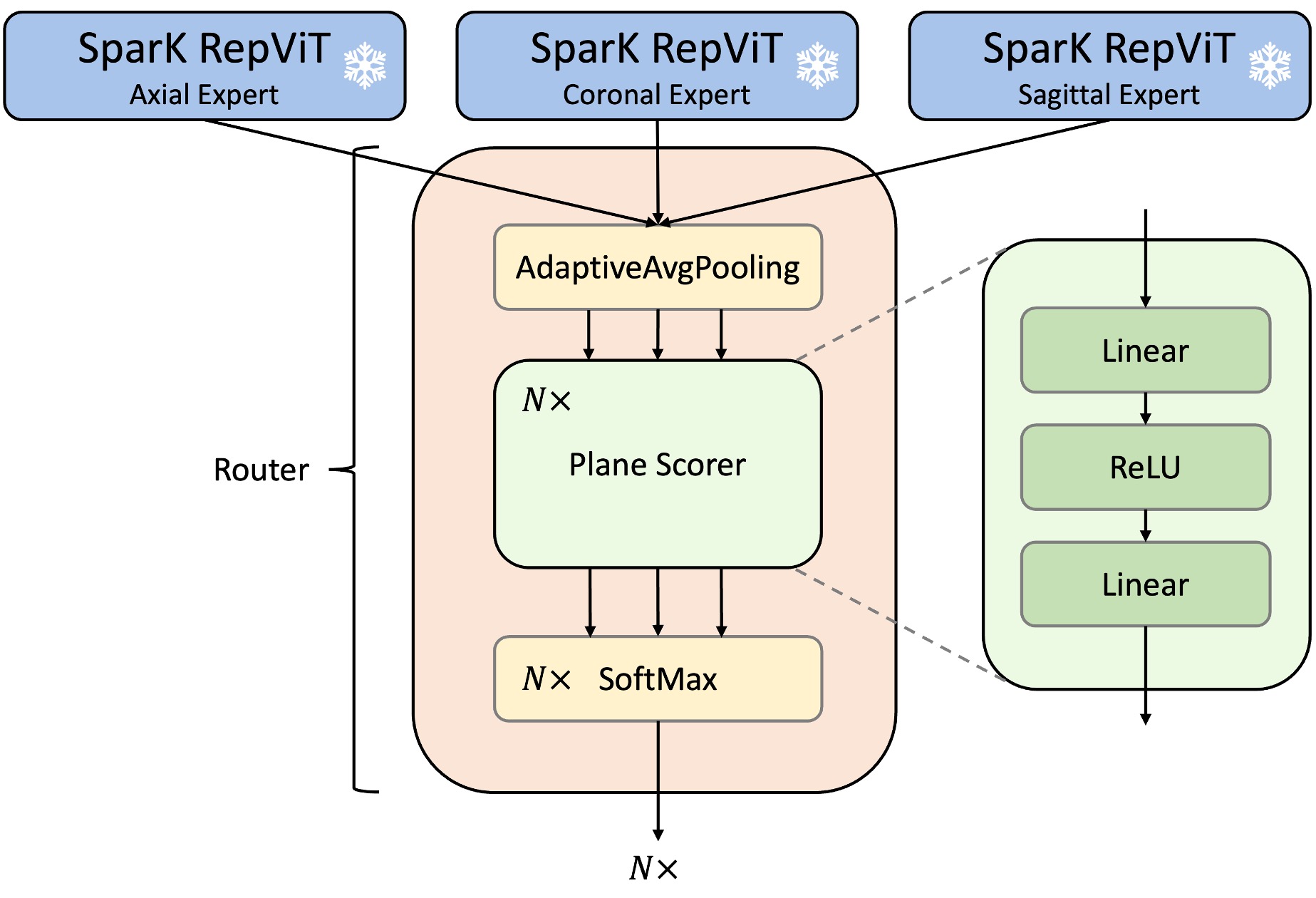

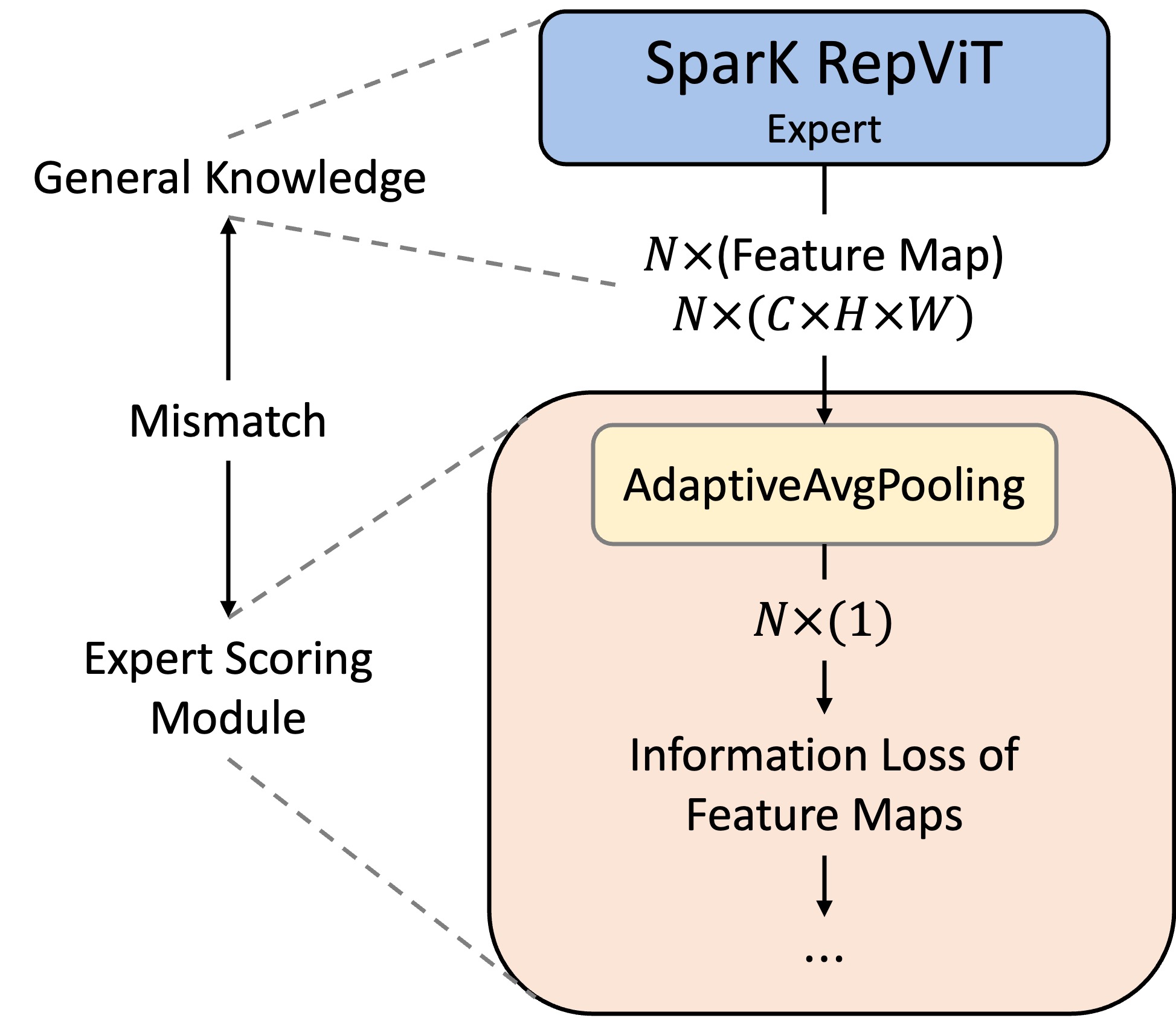

Router Module

\[\begin{align*} \tilde{F}_k^{(n)} = \mathrm{AAP}(F_k^{(n)}(X)) \in \mathbb{R}^{C_n}, \end{align*}\] where \(\mathrm{AAP}(\cdot)\) is an adaptive average pooling layer and \(k\) indexes the three backbones. The reduced feature maps are then passed to a score function that maps each of them to a scalar,

\[\begin{align*} & z_k^{(n)} = g_n(\tilde{F}_k^{(n)}) \in \mathbb{R} \\ & \rightarrow \mathbf{z}^{(n)} = [z^{(n)}_1, z^{(n)}_2, z^{(n)}_3 ] \in \mathbb{R}^3. \end{align*}\] In this work, the score function \(g_n(\cdot)\) is implemented as two linear layers with a ReLU in between.

The scores of the three backbone outputs are then normalized into weights with a softmax,

\[\begin{align*} w^{(n)}_k = \frac{\exp(z^{(n)}_k)}{\sum_{i=1}^{3} \exp(z^{(n)}_i)}. \end{align*}\] The weight \(w^{(n)}_k\) determines how much importance the features of plane \(k\) receive relative to the other planes. Given the weights, the router module fuses the backbone outputs as a weighted sum,

\[\begin{align*} Z^{(n)} = \sum_{i=1}^{3} w^{(n)}_i \cdot F^{(n)}_i(X). \end{align*}\]
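The four equations above can be sketched end to end for a single feature scale. This is a minimal NumPy illustration rather than the project's implementation: the class name `Router`, the hidden width of 16, the random initialization, and the toy feature shapes are all assumptions.

```python
import numpy as np

def softmax(z):
    # Subtract the max for numerical stability
    e = np.exp(z - z.max())
    return e / e.sum()

class Router:
    """Fuses same-shaped feature maps of shape (C, H, W) at one scale."""

    def __init__(self, channels, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        # Score function g_n: Linear -> ReLU -> Linear, mapping R^C to R.
        # Randomly initialized here; in training these would be learned.
        self.w1 = rng.normal(0.0, 0.1, size=(channels, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, size=(hidden, 1))
        self.b2 = np.zeros(1)

    def score(self, pooled):
        h = np.maximum(pooled @ self.w1 + self.b1, 0.0)  # ReLU
        return (h @ self.w2 + self.b2)[0]

    def __call__(self, feats):
        # Adaptive average pooling to 1x1: (C, H, W) -> (C,)
        pooled = [f.mean(axis=(1, 2)) for f in feats]
        # One scalar score per backbone, then softmax over the backbones
        z = np.array([self.score(p) for p in pooled])
        w = softmax(z)
        # Weighted sum of the original (unpooled) feature maps
        fused = sum(wk * f for wk, f in zip(w, feats))
        return fused, w

# Toy features standing in for the axial, coronal, and sagittal backbones
rng = np.random.default_rng(1)
feats = [rng.normal(size=(8, 4, 4)) for _ in range(3)]
router = Router(channels=8)
fused, weights = router(feats)
```

The same score function is shared across the three backbones, matching \(g_n\) being indexed by the scale \(n\) only. Because the weights come from a softmax, they are strictly positive and sum to one, so no plane is ever fully discarded.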

Experiments

Fitness Score

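\[F = 0.1 P + 0.1 R + 0.3 AP_{50} + 0.5 AP_{50:95}\] The fitness score combines precision (\(P\)), recall (\(R\)), and average precision, measured both at an Intersection-over-Union (IoU) threshold of 0.5 (\(AP_{50}\)) and averaged over IoU thresholds from 0.5 to 0.95 (\(AP_{50:95}\)), to evaluate model performance.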
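As a quick worked example, the weighted sum can be written as a small helper. The function name `fitness` and the metric values passed to it are illustrative assumptions, not results from this project.

```python
def fitness(precision, recall, ap50, ap50_95, weights=(0.1, 0.1, 0.3, 0.5)):
    """Weighted sum of the four detection metrics, each in [0, 1]."""
    metrics = (precision, recall, ap50, ap50_95)
    return sum(w * m for w, m in zip(weights, metrics))

# Illustrative inputs only
score = fitness(0.9, 0.8, 0.85, 0.6)  # about 0.725
```

Since half of the weight sits on \(AP_{50:95}\), a model with tighter boxes scores higher than one that only clears the 0.5 IoU threshold.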

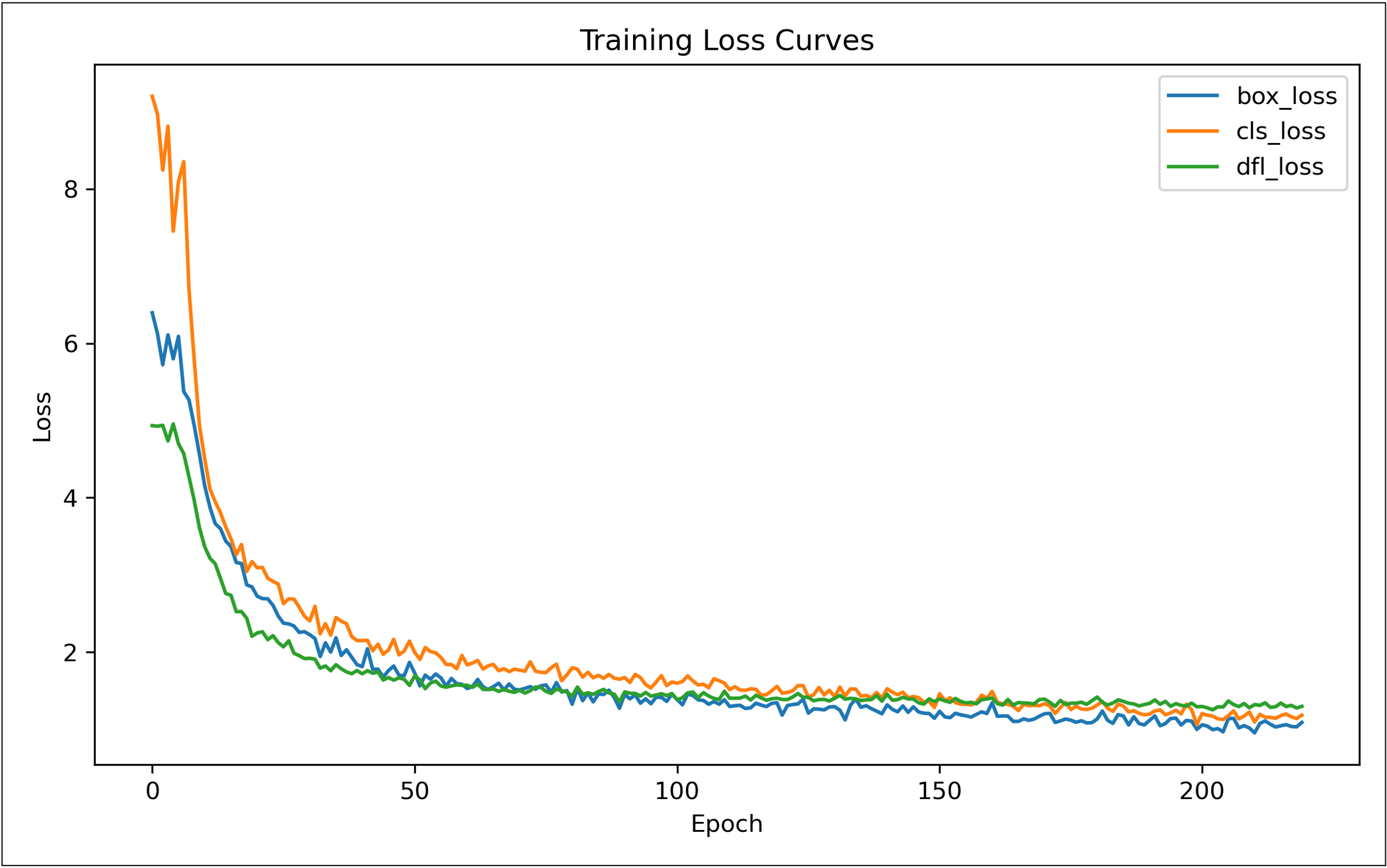

Training Losses

- Box loss: evaluates how accurately the predicted bounding boxes match the ground truth using an IoU-based measure \(\rightarrow\) penalizing poor localization.

- Objectness loss: supervises the model’s confidence in distinguishing object regions from background regions \(\rightarrow\) helping reduce both false positives and false negatives.

- Classification loss: measures how well the model assigns the correct class labels to detected objects \(\rightarrow\) ensuring accurate category discrimination.
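To make the IoU-based measure behind the box loss concrete, here is a minimal sketch that computes IoU for two axis-aligned boxes and turns it into a loss as `1 - IoU`. This plain form is a simplifying assumption; detectors often use refined IoU variants, and the exact variant used here is not stated above. The function name `box_iou` and the example boxes are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so disjoint boxes give no intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

pred = (10.0, 10.0, 50.0, 50.0)   # illustrative predicted box
truth = (30.0, 30.0, 70.0, 70.0)  # illustrative ground-truth box
iou = box_iou(pred, truth)
box_loss = 1.0 - iou  # simplified loss: lower is better
```

A perfectly localized prediction gives an IoU of 1 and a loss of 0, while a prediction that misses the tumor entirely gives an IoU of 0 and the maximum loss of 1.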

Learning Curves

Performance Comparison

Ablation Study of Frozen Backbones

Analysis of the Result

- Effectiveness of Router Module

- Assigns a higher weight to the backbone that matches the plane of the input

- Assigns lower, but nonzero, weights to the other planes

- E.g. given a coronal brain tumor image,

- The output should be something like \(Z = 0.2 F_{axi}(X) + 0.65 F_{cor}(X) + 0.15 F_{sag}(X)\).

- Further studies on evaluating the router module are required

- Discrepancy in the connection

- Backbone outputs general knowledge of plane-specific brain tumor images

- Router module scores the importance of the information

- The average pooling layer causes severe loss of feature information

Conclusion

- Introduced three-backbone architecture

- Each was pretrained and specialized for the axial, coronal, and sagittal planes

- Employed a router module to fuse plane-specific features from the backbones

- Could not reach the state-of-the-art performance of PK-YOLO

- Demonstrated stronger performance in certain cases (i.e., axial plane)

- Achieved a 49.2% reduction in trainable parameters

- Training overhead was significantly reduced
