Dynamic LION

Modifying LION's soft prompting into dynamic soft prompting

Introduction

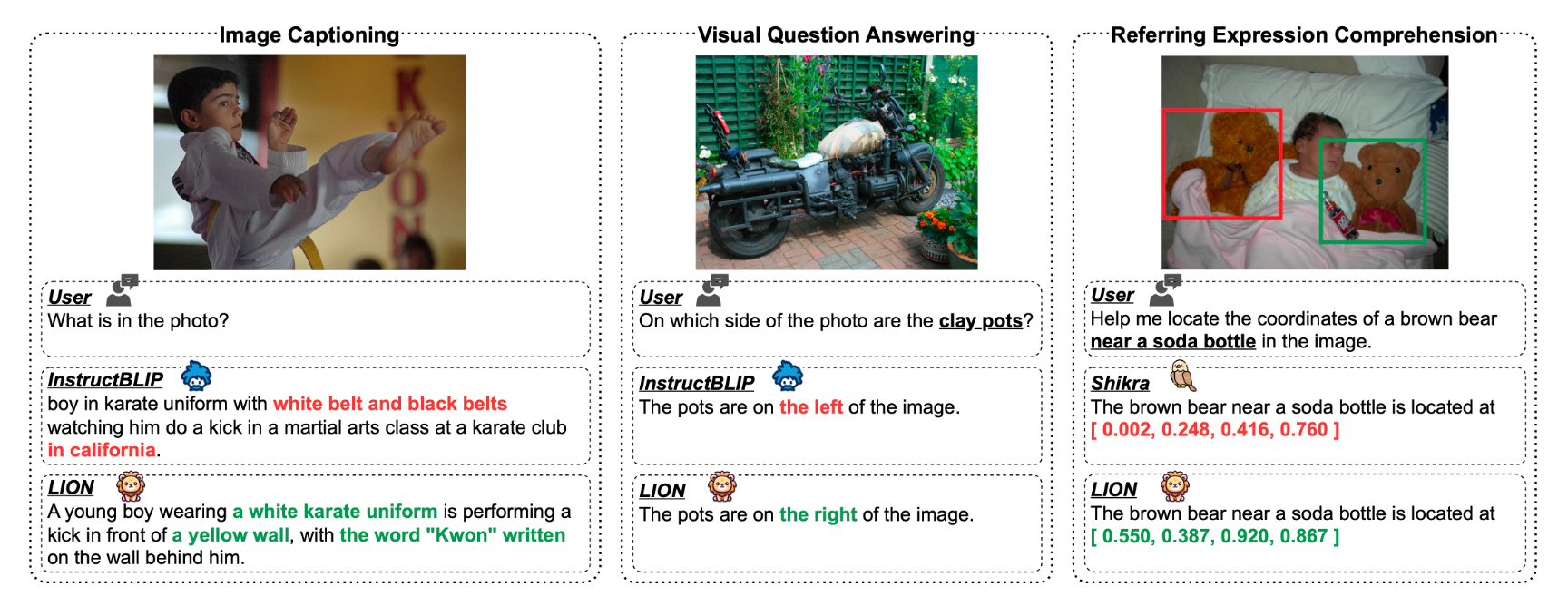

LION is a multimodal large language model designed to handle three main vision-language tasks: image captioning, visual question answering (VQA), and referring expression comprehension (REC).

LION takes an image and a task-specific prompt as inputs. Depending on the task, it produces either an image-level output, such as a caption or a VQA answer, or a region-level output, namely a bounding box for REC.

Figure 1. Overview of LION's tasks [1]. At the image level, LION performs image captioning and visual question answering (VQA). At the region level, it performs visual grounding (locating the target object).

Limitations of the Previous Work

We identified two main concerns in the original work, and these motivated our method.

First, the model relies heavily on off-the-shelf vision models, especially RAM. Image-level tasks depend strongly on RAM's performance, and the image tags RAM generates are typically imperfect. The authors themselves point out this RAM-dependent architecture as a limitation in the paper, since imperfect tags can eventually cause object hallucination.

Second, the model relies on soft prompting. Although some studies have experimented with soft prompting, we believe its capabilities still require more exploration and that it is not yet a fully established technique. These limitations inspired us to propose a method we call dynamic soft prompting.

Method

Given these limitations, the idea is relatively straightforward: instead of relying on a static soft prompt, what if we made the prompt dynamic?

Instead of using a single frozen vector for every image, we built a pipeline that generates a unique, context-aware prompt embedding for every single input.

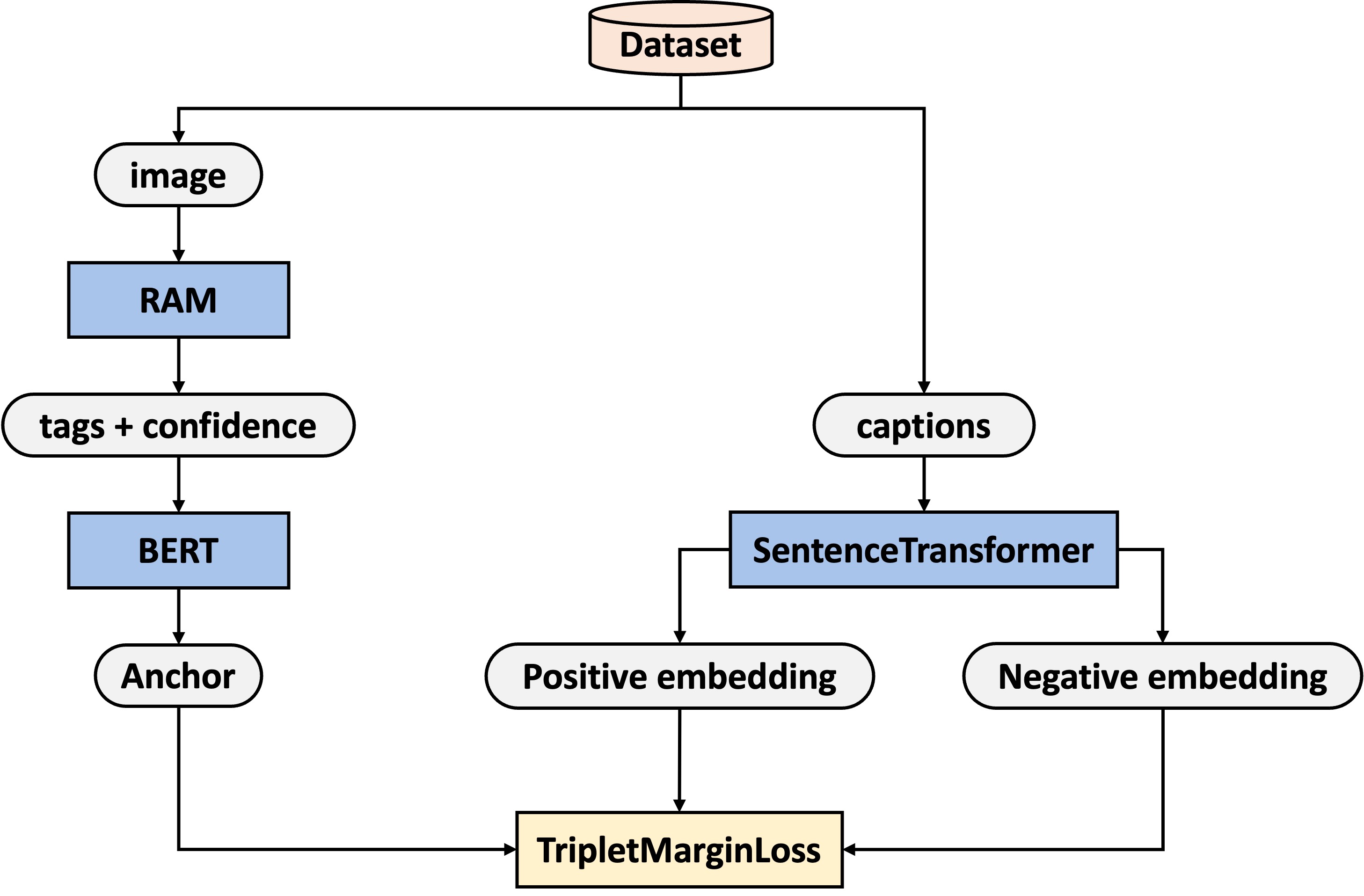

Our approach breaks down into three stages. First, we extract the tags and their confidence scores from the image using RAM. Second, we use a fine-tuned BERT model to convert those tags into meaningful embeddings. Third, we project those embeddings and feed them into the LION model's architecture.

Figure 2. The dynamic soft prompt generation process [1].
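To make the third stage more concrete, here is a minimal pure-Python sketch of how one tag embedding could be projected into a short sequence of prompt vectors and combined with the instruction embeddings. The sizes (`BERT_DIM`, `D_MODEL`, `NUM_PROMPT_TOKENS`), the Gaussian initialization, and the choice to prepend the prompt to the instruction are all assumptions for illustration; the real model works on tensors with a trained projection layer, and LION's actual integration point may differ.

```python
import random

# Hypothetical toy sizes; real BERT and Flan-T5 hidden sizes are much larger.
BERT_DIM = 8           # size of the tag embedding produced by BERT
D_MODEL = 4            # hidden size expected by the language model
NUM_PROMPT_TOKENS = 3  # number of dynamic soft prompt vectors per image


def init_projection(in_dim, out_dim, seed=0):
    """Randomly initialize a linear layer (weight: out_dim x in_dim, bias: out_dim)."""
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def project(x, weight, bias):
    """Compute weight @ x + bias for a single vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def dynamic_soft_prompt(tag_embedding, weight, bias):
    """Map one tag embedding to NUM_PROMPT_TOKENS vectors of size D_MODEL."""
    flat = project(tag_embedding, weight, bias)
    return [flat[i * D_MODEL:(i + 1) * D_MODEL] for i in range(NUM_PROMPT_TOKENS)]


def build_llm_input(tag_embedding, instruction_embeddings, weight, bias):
    """Prepend the image-specific prompt vectors to the instruction token embeddings."""
    return dynamic_soft_prompt(tag_embedding, weight, bias) + instruction_embeddings


weight, bias = init_projection(BERT_DIM, NUM_PROMPT_TOKENS * D_MODEL)
tag_embedding = [0.1] * BERT_DIM                   # stand-in for a BERT output
instruction = [[0.0] * D_MODEL for _ in range(5)]  # stand-in for 5 instruction tokens
llm_input = build_llm_input(tag_embedding, instruction, weight, bias)
print(len(llm_input), len(llm_input[0]))  # prints: 8 4
```

Because the prompt vectors are recomputed from each image's tag embedding, every input gets its own prompt, rather than one learned prompt shared by all images.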

BERT Pipeline

The first step of the BERT pipeline was to build a dataset for training BERT. We modified the authors' RAM code to extract not only the tags but also their confidence scores. The idea is that the scores give BERT extra information about how important each tag is: when generating the dynamic prompt, we give more weight to objects the model is certain about and less attention to objects that could be hallucinations.
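A minimal sketch of how RAM's tags and confidence scores might be serialized into a single anchor string for BERT is shown below. The `tag (score)` format, the descending sort, and the optional `min_score` cutoff are assumptions for illustration; the text only states that the scores are passed along with the tags.

```python
def build_tag_string(tags_with_scores, min_score=0.0, decimals=2):
    """Serialize (tag, confidence) pairs into one string for BERT.

    Tags are ordered by confidence so the most certain objects come first.
    Tags below the hypothetical `min_score` cutoff are dropped; the default
    of 0.0 keeps every tag.
    """
    kept = [(tag, score) for tag, score in tags_with_scores if score >= min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return ", ".join(f"{tag} ({score:.{decimals}f})" for tag, score in kept)


# Toy stand-in for RAM output on one image.
ram_output = [("frisbee", 0.88), ("dog", 0.97), ("grass", 0.61), ("cat", 0.12)]
print(build_tag_string(ram_output, min_score=0.2))
# dog (0.97), frisbee (0.88), grass (0.61)
```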

For the captions, our original dataset contained five captions per image. Since we need only one caption per image, we decided which one to pick by computing the average embedding of the five captions with Sentence Transformer and choosing the caption closest to this centroid. The intuition is that this caption is likely the most representative of the image and provides the most useful information.
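The centroid-based selection can be sketched as follows. In practice the vectors would come from a Sentence Transformer model (for example via `SentenceTransformer.encode`); here, small hand-written vectors stand in for them, and cosine similarity is assumed as the measure of closeness to the centroid.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def pick_representative_caption(captions, embeddings):
    """Return the caption whose embedding is closest to the mean of all embeddings."""
    dim = len(embeddings[0])
    centroid = [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]
    best = max(range(len(captions)), key=lambda i: cosine(embeddings[i], centroid))
    return captions[best]


captions = [
    "A dog leaps to catch a frisbee.",
    "A brown dog catches a frisbee on the grass.",
    "A park with green grass.",
]
# Toy 2-D stand-ins for sentence embeddings (real ones have hundreds of dimensions).
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
print(pick_representative_caption(captions, embeddings))
# A brown dog catches a frisbee on the grass.
```

The outlier caption ("A park with green grass.") pulls the centroid only partway, so the caption that sits between the two themes ends up closest to it.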

Now that we have the tag strings and the sentence embeddings, we can feed them to the Triplet Margin Loss. This loss requires an anchor, a positive example, and a negative example. In our dataset, the tag string is the anchor, the correct caption is the positive, and a random, unrelated caption is the negative.

Figure 3. BERT Pipeline.
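Assembling the triplets might look like the following sketch. The record layout (`image_id`, `tag_string`, `caption`) and the seeded random sampling are hypothetical; the only rule taken from the text is that the negative is a caption belonging to a different, unrelated image.

```python
import random


def build_triplets(records, seed=0):
    """Create (anchor, positive, negative) text triplets.

    records: list of dicts with hypothetical keys 'image_id', 'tag_string'
    (RAM tags + scores) and 'caption' (the representative caption).
    """
    rng = random.Random(seed)
    triplets = []
    for record in records:
        # Sample the negative caption from any *other* image.
        others = [r for r in records if r["image_id"] != record["image_id"]]
        negative = rng.choice(others)["caption"]
        triplets.append((record["tag_string"], record["caption"], negative))
    return triplets


records = [
    {"image_id": 1, "tag_string": "dog (0.97), frisbee (0.88)", "caption": "A dog catches a frisbee."},
    {"image_id": 2, "tag_string": "pizza (0.95), table (0.80)", "caption": "A pizza on a wooden table."},
    {"image_id": 3, "tag_string": "bus (0.93), street (0.71)", "caption": "A red bus on a city street."},
]
for anchor, positive, negative in build_triplets(records):
    print(anchor, "|", positive, "|", negative)
```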

Fine-Tuning BERT

\[ L(a, p, n) = \max\{d(a_i, p_i) - d(a_i, n_i) + \mathrm{margin},\ 0\}, \]where \(d(x_i, y_i) = \lVert x_i - y_i \rVert_p\).

- Anchor: BERT([tags + scores])

- Positive: \(\operatorname{Avg}(\mathrm{BERT}(\text{[image captions]}))\)

- Negative: \(\operatorname{Avg}(\mathrm{BERT}(\text{[image captions]}_{\text{neg}}))\), using unrelated captions
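The loss above translates almost line for line into code. This is a minimal pure-Python sketch of the formula, averaged over a batch, and not the authors' implementation; during actual training one would typically use PyTorch's built-in `torch.nn.TripletMarginLoss(margin=1.0, p=2.0)` on the BERT embeddings instead.

```python
def p_norm_distance(x, y, p=2.0):
    """d(x, y) = ||x - y||_p."""
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)


def triplet_margin_loss(anchors, positives, negatives, margin=1.0, p=2.0):
    """Mean over the batch of max(d(a_i, p_i) - d(a_i, n_i) + margin, 0)."""
    losses = [
        max(p_norm_distance(a, pos, p) - p_norm_distance(a, neg, p) + margin, 0.0)
        for a, pos, neg in zip(anchors, positives, negatives)
    ]
    return sum(losses) / len(losses)


anchors = [[0.0, 0.0], [0.0, 0.0]]
positives = [[1.0, 0.0], [2.0, 0.0]]  # first positive is close, second is far
negatives = [[3.0, 0.0], [1.0, 0.0]]  # first negative is far, second is close
print(triplet_margin_loss(anchors, positives, negatives))  # 1.0
```

The first triplet already satisfies the margin (1 - 3 + 1 < 0) and contributes zero loss; only the second, where the negative sits closer to the anchor than the positive, contributes (2 - 1 + 1 = 2). This is the push-pull behavior the fine-tuning relies on.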

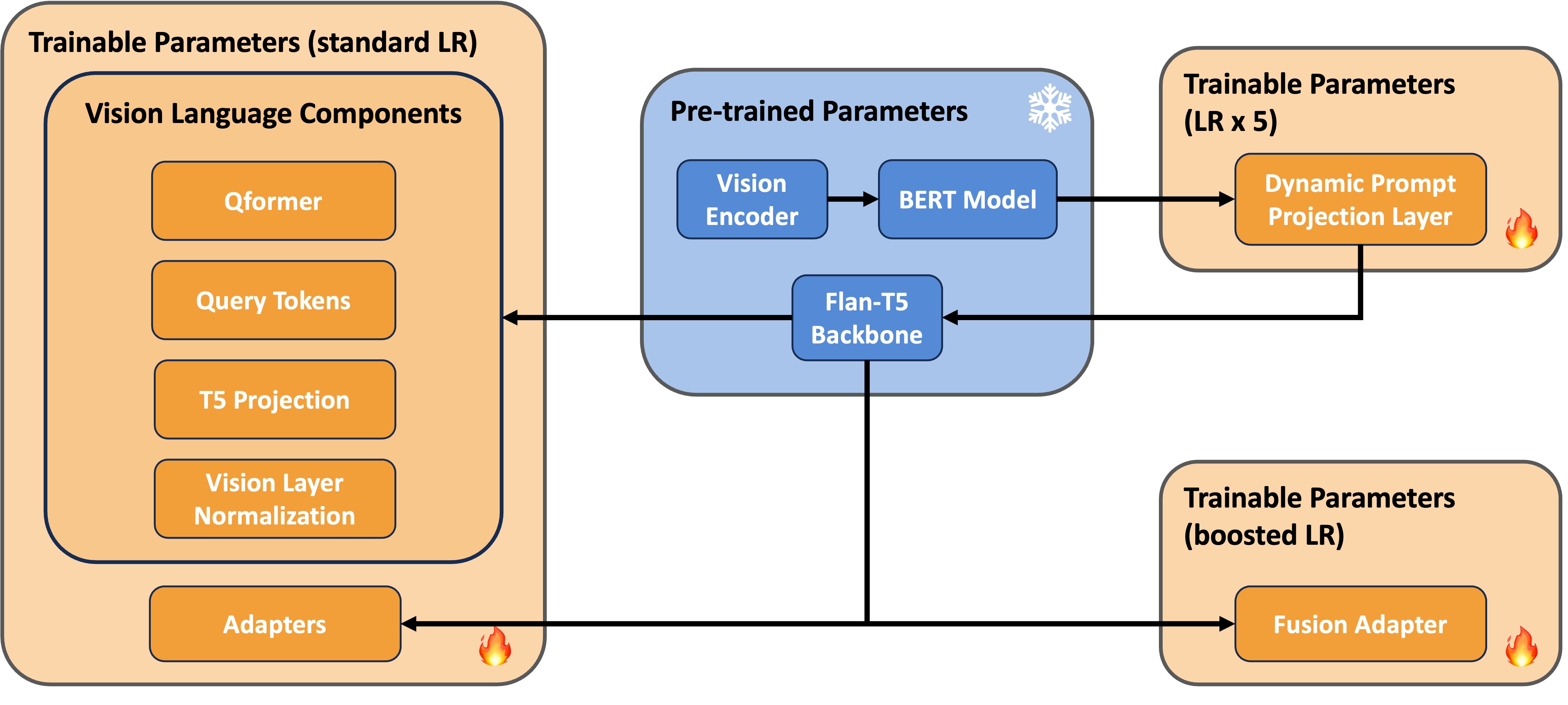

Parameter-Efficient Fine-Tuning

Another crucial change was to use Parameter-Efficient Fine-Tuning (PEFT). Essentially, we keep most of the model's parameters frozen, including the vision encoder and the Flan-T5 backbone, and compute gradients only for the other components, such as the dynamic prompt projection layer, the fusion adapter, and the vision-language components. We also applied differential learning rates, using a much higher learning rate for the new projection layer. Because this layer is initialized with random weights, it needs much larger updates to converge quickly. The goal is to avoid retraining the entire model, which would be computationally expensive. By freezing the pre-trained components that are already good at their job, we can focus training on the new additions.

Figure 4. Pipeline of parameter-efficient fine-tuning (PEFT).
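The freezing and differential learning rates can be expressed as optimizer parameter groups. The sketch below is pure Python so it runs without PyTorch; the module prefixes (`vision_encoder.`, `llm.`, `dynamic_prompt_proj.`) and both learning-rate values are hypothetical. With a real model, `named_params` would come from `model.named_parameters()`, the frozen parameters would additionally get `requires_grad_(False)`, and the returned list of `{"params": ..., "lr": ...}` dicts is the per-group format that `torch.optim` optimizers such as `AdamW` accept.

```python
# Hypothetical module-name prefixes for the frozen parts and the new layer.
FROZEN_PREFIXES = ("vision_encoder.", "llm.")   # vision encoder + Flan-T5 backbone
NEW_LAYER_PREFIXES = ("dynamic_prompt_proj.",)  # randomly initialized projection


def build_param_groups(named_params, base_lr=1e-5, new_layer_lr=1e-3):
    """Split parameters into frozen names and two trainable groups with different LRs."""
    frozen, trained, new_layer = [], [], []
    for name, param in named_params:
        if name.startswith(FROZEN_PREFIXES):
            frozen.append(name)        # no gradients will be computed for these
        elif name.startswith(NEW_LAYER_PREFIXES):
            new_layer.append(param)    # new, randomly initialized weights
        else:
            trained.append(param)      # e.g. fusion adapter, vision-language parts
    groups = [
        {"params": trained, "lr": base_lr},
        {"params": new_layer, "lr": new_layer_lr},  # much higher learning rate
    ]
    return groups, frozen


# Toy stand-ins: parameter names paired with placeholder "parameters".
names = [
    "vision_encoder.block0.weight",
    "llm.encoder.layer0.weight",
    "fusion_adapter.weight",
    "dynamic_prompt_proj.weight",
    "dynamic_prompt_proj.bias",
]
named_params = [(n, f"<{n}>") for n in names]
groups, frozen = build_param_groups(named_params)
print(frozen)
print([(len(g["params"]), g["lr"]) for g in groups])
```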

Experiment

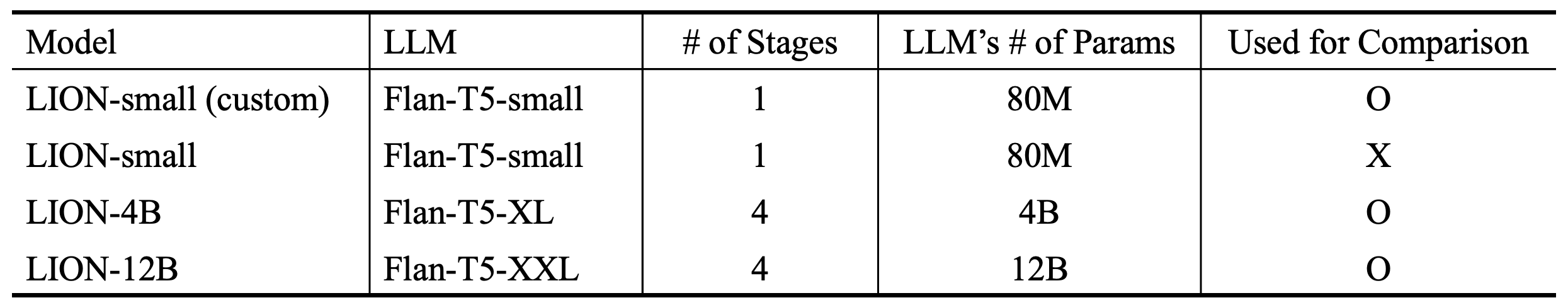

Setup

Table 1. Comparison of models for experimental setup.

BERT Fine-Tuning

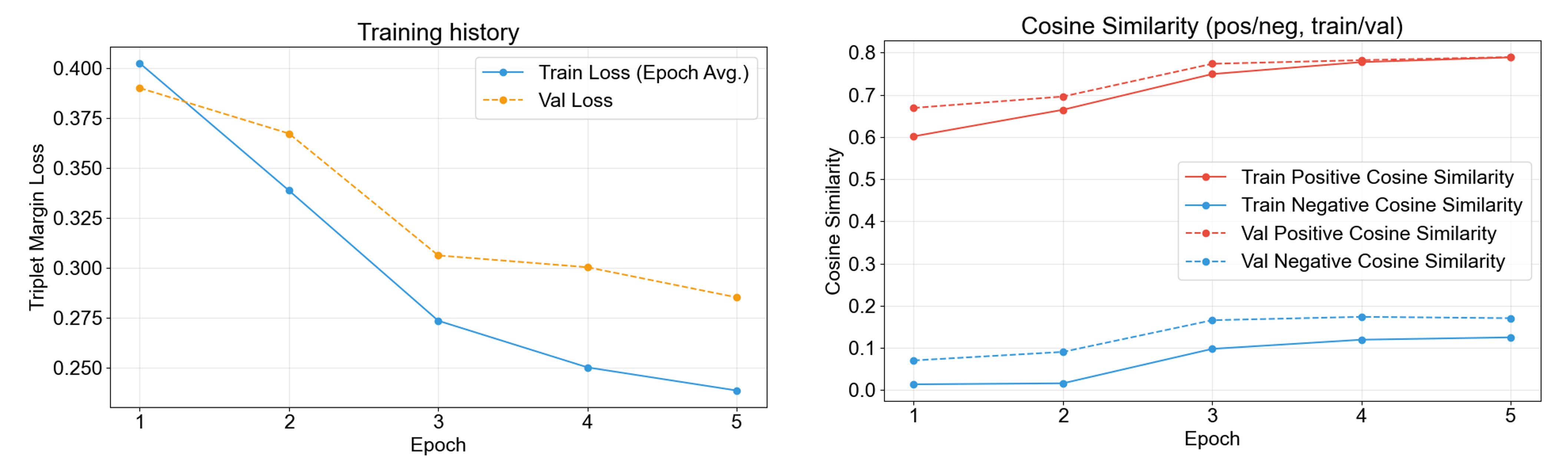

Turning to the results, let's first look at how the Triplet Margin Loss performed for our BERT model. On the left, both training and validation loss decrease steadily. This is the first evidence that the model is learning and that the loss function works. The second piece of evidence is the graph on the right, which shows cosine similarity measured on the training and validation sets. It indicates that the anchors (the tag strings) become very similar to their image captions. Recall that a cosine similarity of 1 means the vectors point in the same direction, 0 means they are orthogonal (perpendicular), and -1 means they point in opposite directions. Anchor-positive pairs reach scores of around 0.8, while anchor-negative pairs stay below 0.2. This large gap confirms that the push-pull loss worked well, producing distinct, well-separated embeddings for the correct image context.

Figure 5. Training results of fine-tuning the BERT model used for dynamic soft prompt generation. (Left) Training and validation loss decreasing steadily. (Right) Cosine similarity measured across the training and validation sets.
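The right-hand plot tracks average anchor-positive and anchor-negative cosine similarity. A minimal sketch of that metric follows, assuming it is a plain mean over the evaluated triplets (the text does not say exactly how it was aggregated); the toy vectors are chosen to show the 1, 0, and -1 cases.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1 = same direction, 0 = orthogonal, -1 = opposite."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def similarity_gap(anchors, positives, negatives):
    """Return (mean anchor-positive sim, mean anchor-negative sim, their gap)."""
    n = len(anchors)
    ap = sum(cosine(a, p) for a, p in zip(anchors, positives)) / n
    an = sum(cosine(a, neg) for a, neg in zip(anchors, negatives)) / n
    return ap, an, ap - an


anchors = [[1.0, 0.0], [0.0, 1.0]]
positives = [[2.0, 0.0], [0.0, 3.0]]   # same directions as the anchors
negatives = [[0.0, 1.0], [0.0, -1.0]]  # orthogonal, then opposite
print(similarity_gap(anchors, positives, negatives))  # (1.0, -0.5, 1.5)
```

Applied to the reported values, roughly 0.8 for anchor-positive pairs and under 0.2 for anchor-negative pairs, the gap is about 0.6 or larger.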

Training Result of LION

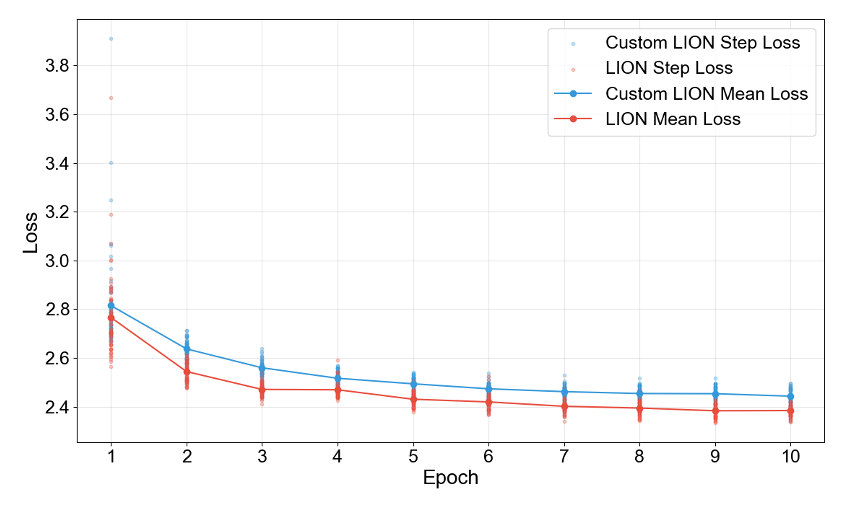

After fine-tuning BERT, we trained our dynamic LION model and compared it with the original LION model. Blue represents our dynamic LION, and red represents the original LION. As shown in the figure, the two learning curves follow the same convergence trend, but the original LION reaches a lower loss, suggesting that it outperforms our model.

Figure 6. Training results of the original LION and the custom LION. The original LION converges to a lower loss, but both models follow the same learning-curve trend.

Evaluation on Image-Level Tasks

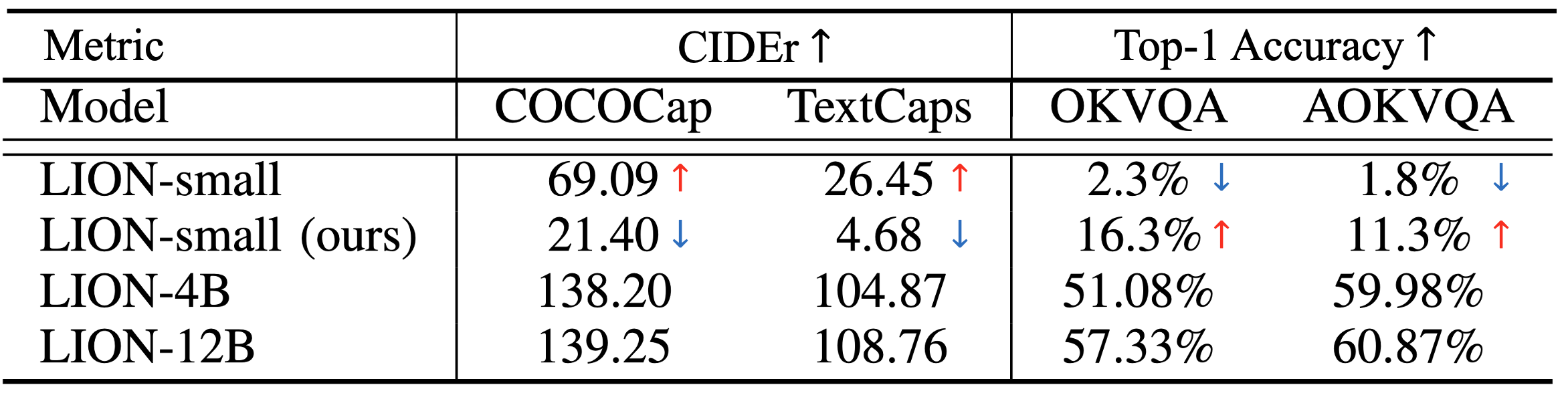

We conducted two types of evaluation. The first tests our model on image-level tasks: we used the COCOCap and TextCaps datasets for image captioning, and the OKVQA and AOKVQA datasets for VQA. As the table shows, the original LION performs better on image captioning, consistent with the learning curves above. On the VQA task, however, our model outperformed the original model by a large margin.

Table 2. Comparison on image captioning and Visual Question Answering (VQA).

Evaluation on Region-Level Tasks

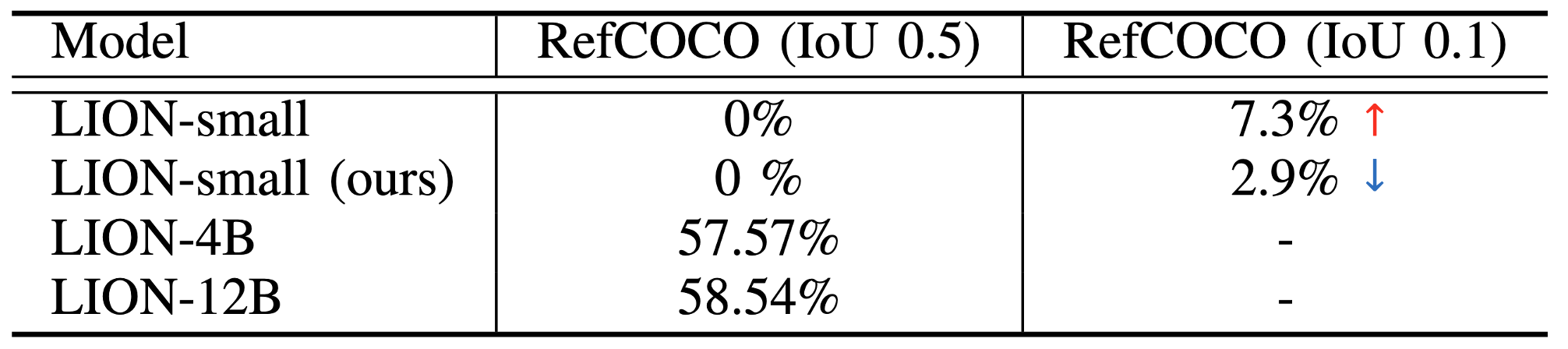

The second evaluation covers a region-level task, referring expression comprehension (REC). We used the RefCOCO dataset and evaluated both the original LION and our model using IoU with a threshold of 0.1. Unfortunately, as with image captioning, our model did not outperform the original LION.

Table 3. Comparison on Referring Expression Comprehension (REC).
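For reference, here is a minimal sketch of an IoU-based REC accuracy: a predicted box counts as correct when its IoU with the ground-truth box meets the threshold. Boxes are assumed to be `(x1, y1, x2, y2)` corner coordinates, and the threshold is a parameter, set in the example to the 0.1 used in our evaluation. The toy boxes are illustrative only and do not reproduce any reported result.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(ix2 - ix1, 0) * max(iy2 - iy1, 0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def rec_accuracy(predictions, ground_truths, threshold):
    """Fraction of predicted boxes whose IoU with the target is >= threshold."""
    hits = sum(iou(p, g) >= threshold for p, g in zip(predictions, ground_truths))
    return hits / len(ground_truths)


preds = [(0, 0, 2, 2), (10, 10, 12, 12)]
targets = [(1, 1, 3, 3), (0, 0, 2, 2)]
print(iou(preds[0], targets[0]))                    # 1/7, about 0.143
print(rec_accuracy(preds, targets, threshold=0.1))  # 0.5
```

Note that the same predictions would score 0.0 at a stricter threshold such as 0.5, so the chosen threshold strongly affects the reported accuracy.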

Analysis of the Results

- Increased reliance on foundation models

  - By adding more modules (BERT and Sentence Transformer), we made LION's reliance on external pretrained models even more severe.

- Discrepancy between the Flan-T5 LLM and BERT

  - Flan-T5 expects an instruction, whereas BERT provides an image representation.

- Loss of image information during linear projection

  - BERT outputs a representation of the image, but forcing its output to match Flan-T5's input shape with a linear projection caused a loss of image information.

- Layers frozen due to hardware limitations

  - Although we applied PEFT to reduce training cost, several Flan-T5 layers still had to be frozen because of our limited GPU resources.

- BERT enhanced VQA performance

  - BERT was specialized on image tags.

  - Confidence scores further improved performance on simple VQA tasks.

Reference

[1] Chen, G., Shen, L., Shao, R., Deng, X., & Nie, L. (2024). Lion: Empowering multimodal large language model with dual-level visual knowledge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 26540-26550).