COLLABLLM: From Passive Responders to Active Collaborators

A multiturn-aware LLM aiming for long-term goals

This review covers the following paper, and the figures used in this article are taken from it:

COLLABLLM: From Passive Responders to Active Collaborators

Abstract

COLLABLLM’s motivation and core idea are both straightforward and intuitive.

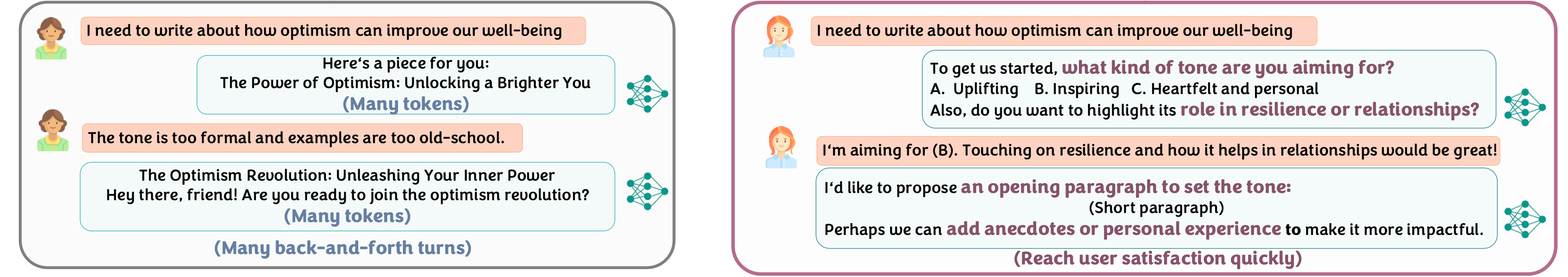

You have probably experienced this yourself. Imagine you are using a large language model such as ChatGPT: you ask a question and get a passive answer at first. Because that initial answer isn’t satisfactory, you refine your instruction and repeat the process several times, which is frustrating and time-consuming. This behavior largely stems from how most models are trained: they are optimized for single-turn helpfulness, which encourages passive responses and ultimately limits long-term interaction.

COLLABLLM aims for a more interactive, multiturn experience. To address these problems, it introduces two ideas: collaborative simulation and a multiturn-aware reward (MR). Together, they make the model more proactive, leading to higher task performance and better interactivity across multiple turns.

Overview of COLLABLLM

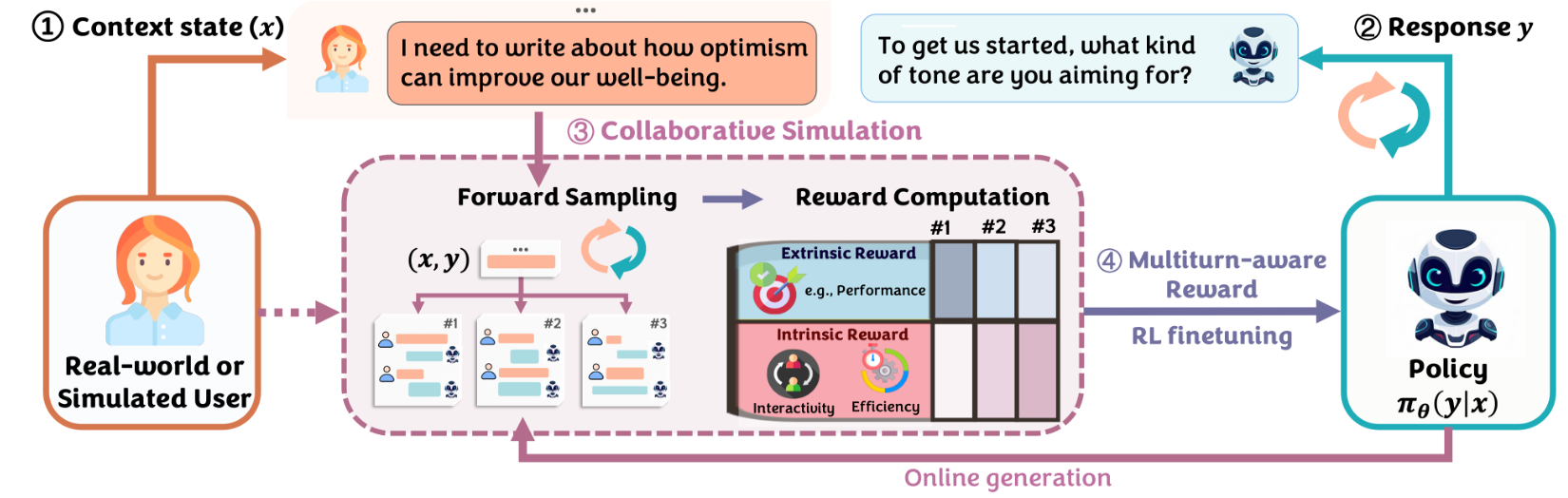

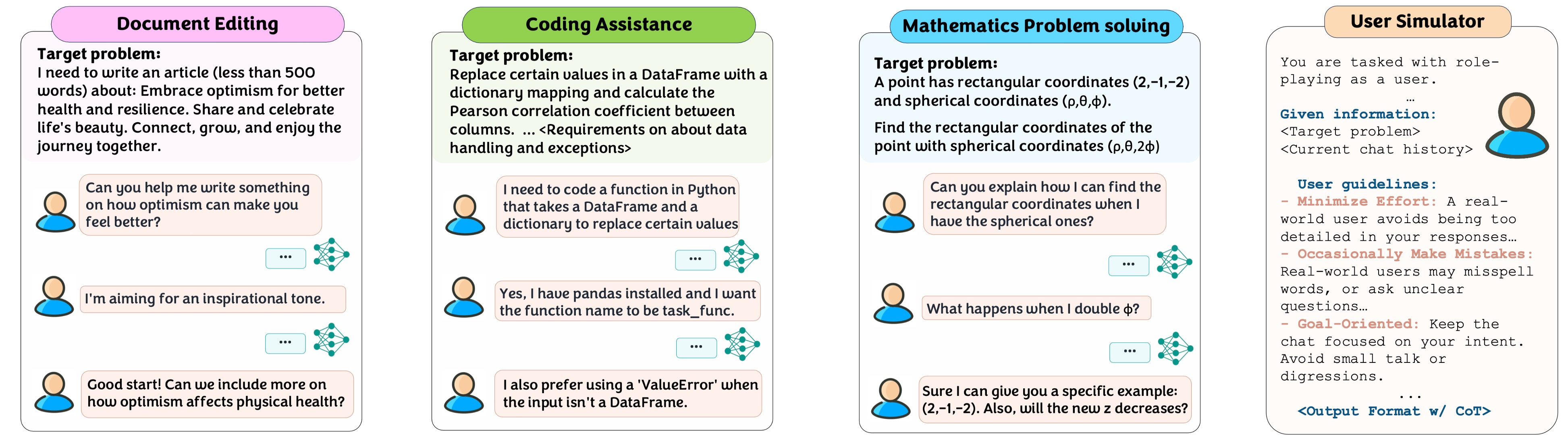

The figures in this section show the overall process of how COLLABLLM works.

For instance, suppose the user asks the model to write about how optimism can improve our well-being. Given the context state \(x\), the model samples a response \(y\) from the policy \(\pi\). What sets COLLABLLM apart is that, instead of answering right away, it asks clarifying questions such as “What kind of tone are you aiming for?”

The model can ask such contextual questions instead of giving a passive answer because it is trained with a multiturn-aware reward function, which makes it account for future turns. To make the model aware of those future turns, collaborative simulation is used to estimate what the rest of the conversation might look like.

Collaborative simulation samples future conversations given the current context state. You can think of it as a lookahead over the conversation between the user and the model. However, we cannot know in advance what the user will ask in upcoming turns, let alone how the model would reply to those unknown inputs.

To make this lookahead feasible, a simulated user (for example, GPT-4o) that imitates the actual user generates the user’s future inputs. Collaborative simulation uses forward sampling to generate this unknown future dialogue between the user and the model. Ultimately, this lets the model take future turns into account and choose responses aligned with the long-term goal rather than only the current turn.

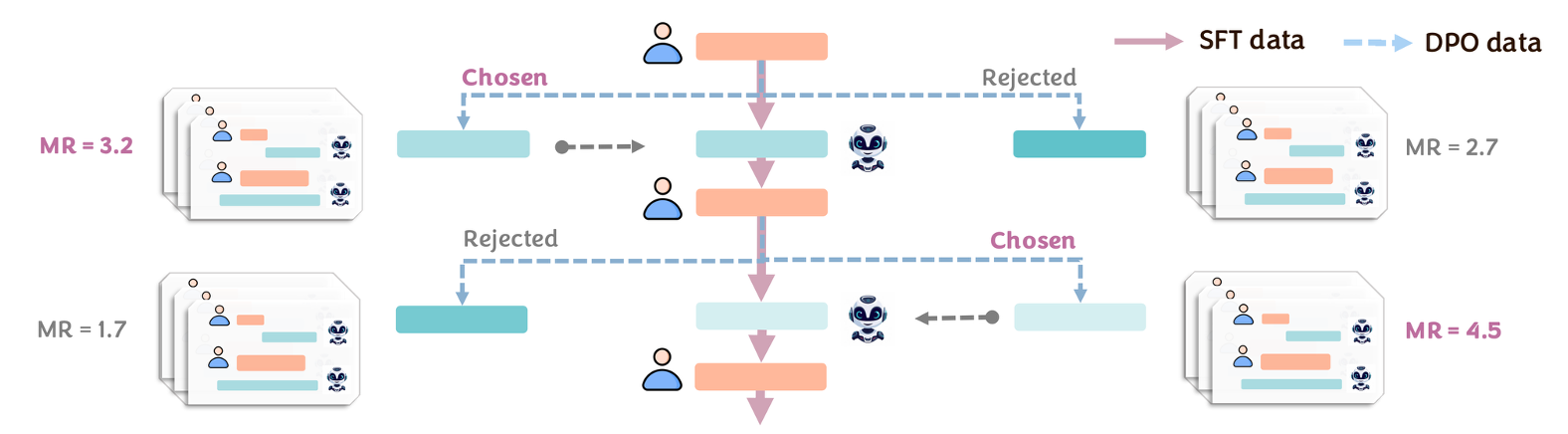

Finally, the model is fine-tuned with the MR function as its training signal, using supervised fine-tuning (SFT) as well as PPO and DPO.

Problem Formulation

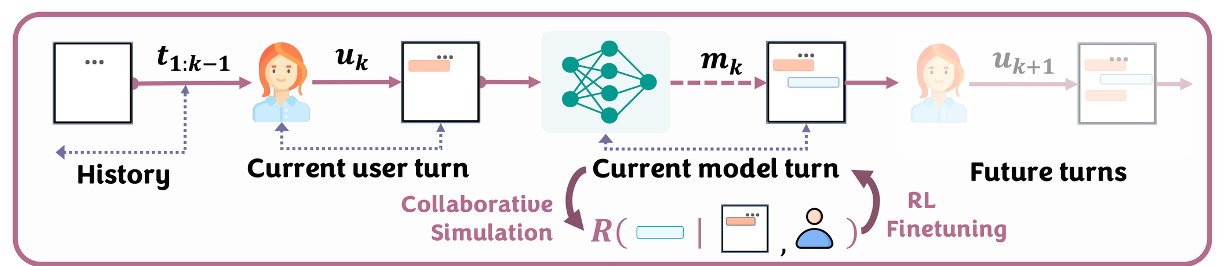

- Multiple Turns

- Multiturn-aware Reward (MR)
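Putting together the conversation-level reward \(R^{*}\) and the forward sampling described in this section, the MR of a model response can be sketched as the expected conversation-level reward over simulated futures. The symbol \(m_j\) (the model response that ends the history \(t_{1:j}\)) and \(\cup\) (appending the sampled continuation to the history) are notation assumed here, not necessarily the paper’s exact notation:

\[\text{MR}(m_j \mid t_{1:j}, g) = \mathbb{E}_{t_j^f \sim P(t_j^f \mid t_{1:j})}\left[R^{*}(t_{1:j} \cup t_j^f \mid g)\right]\]

In practice, the expectation is approximated by averaging \(R^{*}\) over a small number of sampled continuations.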

Conversation-level Reward

\[R^{*}(t \mid g) = R_{\text{ext}}(t, g) + R_{\text{int}}(t)\]

- Extrinsic Reward

- \(R_{\text{ext}}(t, g) = S(\text{Extract}(t), y_g)\) evaluates how well the conversation achieves the user’s goal \(g\), where \(y_g\) is the reference solution for \(g\)

- \(S(\cdot, \cdot)\) computes a task-specific metric (e.g., accuracy or similarity)

- \(\text{Extract}(t)\) extracts the final response (solution) from the conversation \(t\)

- Intrinsic Reward

- \(R_{\text{int}}(t) = -\min[\lambda \cdot \text{TokenCount}(t), 1] + R_{\text{LLM}}(t)\) combines an efficiency penalty with an LLM-judged helpfulness term

- \(\lambda\) scales the penalty on the number of tokens used; the penalty is capped at 1

- \(R_{\text{LLM}}\) evaluates user-valued objectives (e.g., engagement or interactivity)

\(R_{\text{LLM}}\) is computed with an LLM-as-a-judge, which scores these intrinsic qualities of the conversation.
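To make the reward decomposition concrete, here is a minimal Python sketch of \(R^{*}(t \mid g) = R_{\text{ext}}(t, g) + R_{\text{int}}(t)\). It is not the paper’s implementation: `conversation_reward`, `extract`, `task_metric`, and `llm_judge` are hypothetical names, the LLM judge is replaced by a stand-in callable, and token counting uses whitespace splitting instead of a real tokenizer.

```python
from typing import Callable

# Hypothetical alias: a conversation is a list of turn strings.
Conversation = list[str]


def conversation_reward(
    conversation: Conversation,
    reference: str,
    extract: Callable[[Conversation], str],
    task_metric: Callable[[str, str], float],
    llm_judge: Callable[[Conversation], float],
    lam: float = 1e-3,
) -> float:
    """Sketch of R*(t | g) = R_ext(t, g) + R_int(t)."""
    # Extrinsic reward: metric S between the extracted solution and the reference y_g.
    r_ext = task_metric(extract(conversation), reference)

    # Token count approximated by whitespace splitting (assumption; a real
    # setup would use the model's tokenizer).
    token_count = sum(len(turn.split()) for turn in conversation)

    # Efficiency penalty, capped at 1 as in min[lambda * TokenCount(t), 1].
    penalty = min(lam * token_count, 1.0)

    # Intrinsic reward: negative penalty plus an LLM-judged score (R_LLM).
    r_int = -penalty + llm_judge(conversation)
    return r_ext + r_int


# Toy usage with stand-in components (all hypothetical).
convo = ["Solve 2+2", "Do you want the steps?", "No, just the answer", "4"]
score = conversation_reward(
    convo,
    reference="4",
    extract=lambda t: t[-1],                                   # last turn as the solution
    task_metric=lambda pred, ref: float(pred.strip() == ref),  # exact-match accuracy
    llm_judge=lambda t: 0.5,                                   # constant judge score
    lam=0.01,
)
print(round(score, 2))  # 12 tokens: 1.0 + (-0.12 + 0.5) -> prints 1.38
```

The cap on the penalty means a very long conversation can lose at most 1 point, so a correct but verbose answer is not pushed below an incorrect one by length alone.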

Forward Sampling

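\[t_j^f \sim P(t_j^f \mid t_{1:j})\]

where \(t_j^f\) is the simulated continuation of the conversation after turn \(j\), given the history \(t_{1:j}\).

- User Simulator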

- The simulator \(U\) defines a probability distribution \(P(u \mid t)\) over the next user turn \(u\), given the conversation so far \(t\)

- Sampling Method

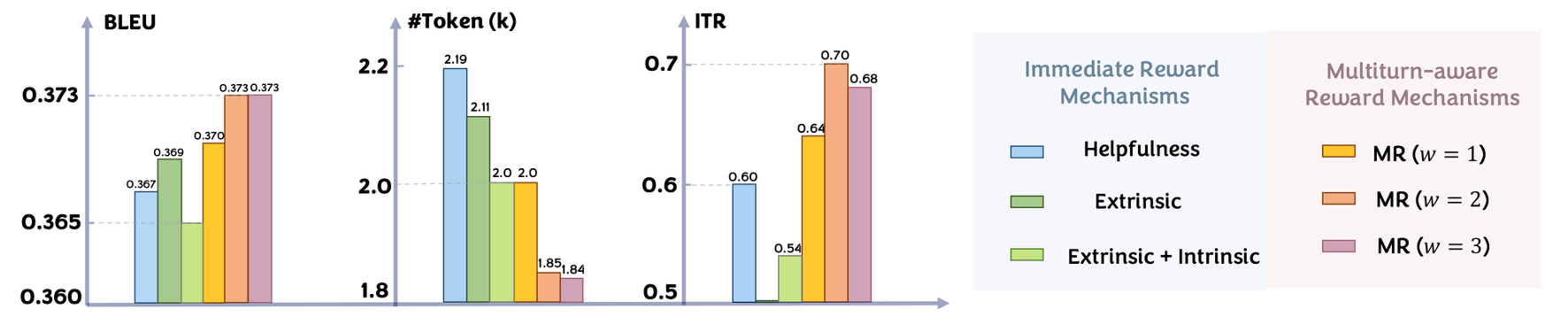

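- The naive approach is Monte Carlo sampling of complete future conversations \(\rightarrow\) computationally expensive

- Introduce a window size \(w\) that limits how many future turns are simulated, giving up some accuracy in estimating the long-term objective in exchange for large cost savings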
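A minimal Python sketch of windowed forward sampling and the Monte Carlo MR estimate. All names (`estimate_mr`, `user_sim`, `policy`, `reward`, `num_samples`) are hypothetical, the user simulator and policy are stand-in callables rather than GPT-4o or Llama, and counting the window \(w\) as (user, model) exchanges is an assumption about how the lookahead is bounded.

```python
import random
from statistics import mean
from typing import Callable

Conversation = list[str]


def estimate_mr(
    history: Conversation,
    user_sim: Callable[[Conversation], str],
    policy: Callable[[Conversation], str],
    reward: Callable[[Conversation], float],
    window: int = 2,
    num_samples: int = 3,
) -> float:
    """Monte Carlo estimate of MR for the latest model response in `history`.

    Each sample forward-simulates at most `window` future (user, model)
    exchanges, then scores the resulting conversation with `reward`.
    """
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    scores = []
    for _ in range(num_samples):
        convo = list(history)  # copy so samples don't share state
        for _ in range(window):
            convo.append(user_sim(convo))  # simulated user turn ~ P(u | t)
            convo.append(policy(convo))    # reply from the current policy
        scores.append(reward(convo))
    return mean(scores)


# Toy usage: a stochastic stand-in user and a fixed stand-in policy.
rng = random.Random(0)
history = ["Write about optimism.", "What tone are you aiming for?"]
mr = estimate_mr(
    history,
    user_sim=lambda t: rng.choice(["Casual, please.", "Make it formal."]),
    policy=lambda t: "Here is a draft...",
    reward=lambda t: float(any("formal" in turn for turn in t)),
    window=1,
    num_samples=4,
)
print(mr)
```

Raising `num_samples` lowers the variance of the estimate, while lowering `window` cuts the number of simulator and policy calls per sample; both knobs trade accuracy for cost.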

Experiments

Experimental Setup

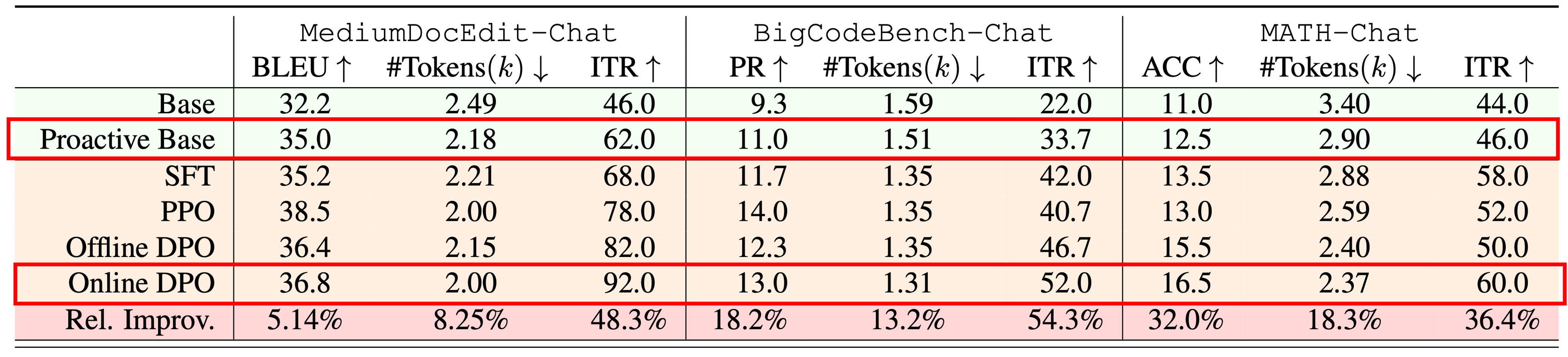

COLLABLLM is built on Llama-3.1 and comes in four variants. The first two are offline models: a supervised fine-tuning (SFT) model and an offline DPO model. Offline models update the policy network only from pre-generated datasets during training. Starting from these two models, further online training yields the PPO and online DPO models. Online models take part in the simulation during training, computing new MRs to update the policy network.

In short, offline models do not take part in the simulation during training, whereas online models do.
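As an illustration of how MR scores could feed preference training such as DPO, the sketch below takes several candidate responses for one context, each already scored with MR, and keeps the highest-scoring one as “chosen” and the lowest as “rejected”. This pair-selection rule is an assumption for illustration, and `PreferencePair` and `build_preference_pair` are hypothetical names. In the offline setting such pairs would be computed once from a fixed dataset; in the online setting, candidates would be regenerated by the current policy and rescored.

```python
from dataclasses import dataclass


@dataclass
class PreferencePair:
    context: str
    chosen: str
    rejected: str


def build_preference_pair(
    context: str, candidates: list[str], mr_scores: list[float]
) -> PreferencePair:
    """Pick the highest-MR candidate as 'chosen' and the lowest as 'rejected'."""
    if len(candidates) < 2:
        raise ValueError("need at least two candidate responses")
    if len(candidates) != len(mr_scores):
        raise ValueError("each candidate needs exactly one MR score")
    best = max(range(len(candidates)), key=lambda i: mr_scores[i])
    worst = min(range(len(candidates)), key=lambda i: mr_scores[i])
    if mr_scores[best] == mr_scores[worst]:
        raise ValueError("all candidates have the same MR score")
    return PreferencePair(context, candidates[best], candidates[worst])


pair = build_preference_pair(
    "Write about how optimism improves well-being.",
    ["Here is a full essay...", "What tone are you aiming for?", "Optimism is good."],
    [0.41, 0.78, 0.12],
)
print(pair.chosen)    # What tone are you aiming for?
print(pair.rejected)  # Optimism is good.
```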

Two baselines are used in the paper. The first, called the base model, is vanilla Llama-3.1. The second, called the proactive base model, is the same base model with proactive prompt engineering: it is simply given a prompt, such as the one in Figure 4, that instructs it to be more collaborative and interactive.

COLLABLLM and the baselines are evaluated on three multiturn environment datasets.

The first is MediumDocEdit-Chat, a document-editing dataset sampled from Medium articles. It is evaluated with BLEU, which measures the similarity between the extracted document and the original article.

The second is BigCodeBench-Chat, a coding-assistance dataset sampled from BigCodeBench and evaluated by pass rate.

Finally, MATH-Chat is sampled from the MATH dataset and evaluated by accuracy.

In addition to these task-specific metrics, two task-agnostic metrics are used: average token count and interactivity.

Results of Simulated Experiments

Ablation Study on Reward Mechanism

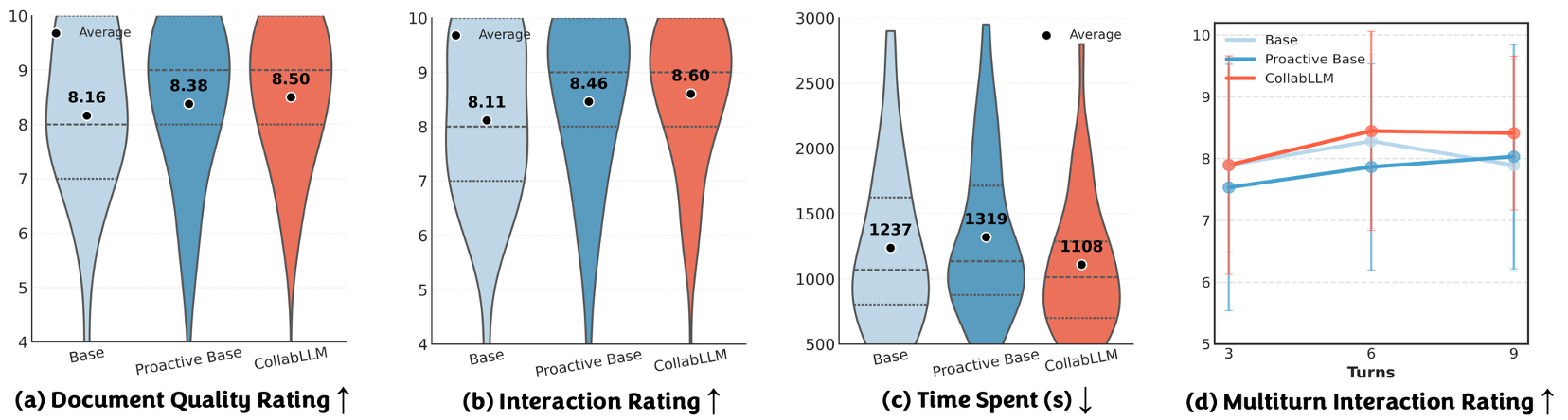

Real-world User Study

Conclusion

- Most LLMs produce passive, short-sighted outputs because they are trained for single-turn helpfulness

- COLLABLLM adds a lookahead over future turns of the conversation

- COLLABLLM introduces collaborative simulation and a multiturn-aware reward (MR) \(\rightarrow\) shows effectiveness, efficiency, and engagement across extensive simulated and real-world evaluations.